Guest Post – Publishing Fast and Slow: A Review of Publishing Speed in the Last Decade

Editor’s Note: Today’s post is by Christos Petrou, founder and Chief Analyst at Scholarly Intelligence. Christos previously worked as an analyst for the Web of Science Group at Clarivate and for the Open Access portfolio at Springer Nature. A geneticist by training, he has also worked in agriculture and as a consultant for Kearney, and he holds an MBA from INSEAD.

Publishing speed matters to authors

A journal’s turnaround time (abbreviated as TAT) is one of the most important criteria when authors choose where to submit their papers. In surveys conducted by Springer Nature in 2015, Editage in 2018, and Taylor & Francis in 2019, the TAT of the peer review process (from submission to acceptance) and the production process (from acceptance to publication) were rated as important attributes by a majority of researchers, and were ranked right behind core attributes such as the reputation of a journal, its Impact Factor, and its readership. Based on the results of its in-house survey, Elsevier states that authors ‘tell us that speed is among their top three considerations for choosing where to publish’.

The surveys do not answer what level of speed matters and to whom, but they indicate that authors generally expect their papers to be dealt with expeditiously without compromising the quality of the peer review process.

The ‘need for speed’ has become a pressing matter for major publishing houses, following the rise of MDPI, which typically publishes papers less than 50 days after submission, and the emergence of preprint servers, pre-publication platforms that can function as substitutes for journals.

This article explores TAT trends across ten of the largest publishers (Elsevier, Springer Nature, Wiley, MDPI, Taylor & Francis, Frontiers, ACS, Sage, OUP, Wolters Kluwer), which as of 2020 accounted for more than two-thirds of all journal papers (per Scimago). It uses the timestamps (dates of submission, acceptance, and publication) of articles and reviews published in 2011/12 and in 2019/20. The underlying samples consist of more than 700,000 randomly selected papers published in more than 10,000 journals.

The publication date is defined as the first time the publisher makes a complete, peer-reviewed article with a DOI available to read. As with the other timestamps, it is a case of ‘garbage in, garbage out’: the date is only as accurate as the publisher’s reporting.
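To make these definitions concrete, here is a minimal Python sketch of how a single paper’s three timestamps could be split into the two stages discussed below: peer review (submission to acceptance) and production (acceptance to publication). The `Paper` record and the `turnaround_days` function are hypothetical illustrations, not the method used for this analysis; the ordering check is just one simple way to flag misreported dates.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Paper:
    # Hypothetical record layout: the three timestamps the analysis relies on.
    submitted: date
    accepted: date
    published: date


def turnaround_days(p: Paper) -> Optional[dict]:
    """Split a paper's total TAT into peer review and production, in days.

    Returns None when the timestamps are out of order, a simple guard
    against misreported dates ("garbage in, garbage out").
    """
    if not (p.submitted <= p.accepted <= p.published):
        return None
    review = (p.accepted - p.submitted).days        # submission -> acceptance
    production = (p.published - p.accepted).days    # acceptance -> publication
    return {"review": review, "production": production, "total": review + production}


example = Paper(date(2020, 1, 1), date(2020, 2, 6), date(2020, 2, 11))
print(turnaround_days(example))  # {'review': 36, 'production': 5, 'total': 41}
```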

Publishing has become faster

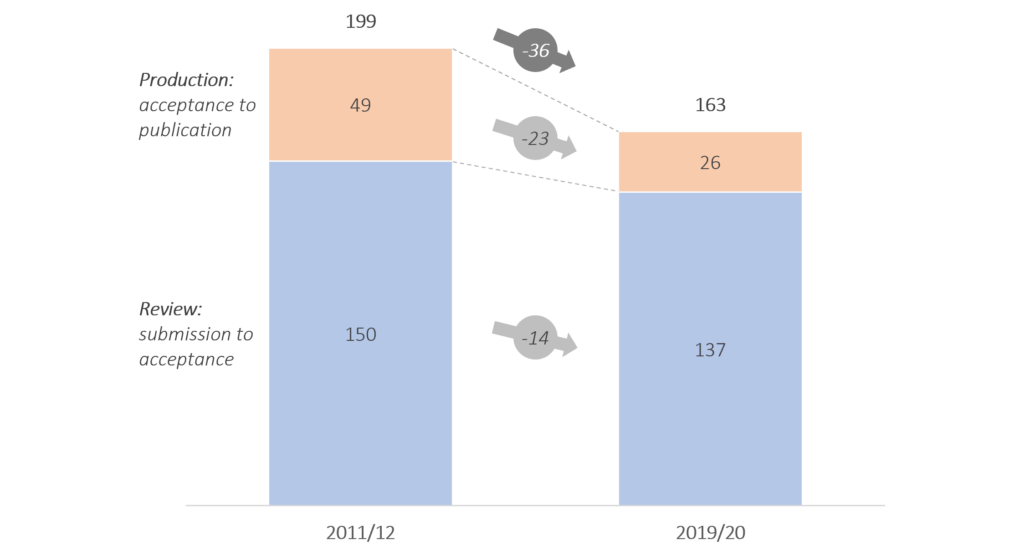

First and foremost, publishing has become faster in the last ten years. It took 199 days on average for papers to get published in 2011/12 and 163 days in 2019/20. The gains are primarily at the production stage (about 23 days faster) and secondarily at peer review (about 14 days faster).
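The stage-level gains follow from simple subtraction. The sketch below assumes that the peer review averages quoted later in this post (150 and 137 days, MDPI included) cover the same sample as the totals above, and derives production time as total minus review. Because the published averages are rounded to whole days, differences computed from them can be a day off the quoted figures (13 rather than about 14 days for peer review).

```python
# Averages quoted in the post (days), rounded to whole days.
TOTAL = {"2011/12": 199, "2019/20": 163}   # submission -> publication
REVIEW = {"2011/12": 150, "2019/20": 137}  # submission -> acceptance (MDPI included)


def production_days(period: str) -> int:
    """Acceptance -> publication, implied by total minus review."""
    return TOTAL[period] - REVIEW[period]


def speedup(series: dict) -> int:
    """Days saved between the two periods (positive means faster)."""
    return series["2011/12"] - series["2019/20"]


production = {p: production_days(p) for p in TOTAL}
print("production:", production)                   # {'2011/12': 49, '2019/20': 26}
print("total speedup:", speedup(TOTAL))            # 36
print("review speedup:", speedup(REVIEW))          # 13
print("production speedup:", speedup(production))  # 23
```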

The acceleration from acceptance to publication is due to (a) the transition to continuous online publishing and (b) the early publication of lightly processed pre-VoR (Version of Record) articles. The acceleration in peer review is driven broadly by MDPI and, within chemistry, by ACS; both publishers stand out for being fast and becoming even faster. Meanwhile, most other major publishers are slower, and their review times have either held steady or lengthened.

Once accepted, papers become available to read faster

In 2011, three of the four large, traditional publishers (Elsevier, Wiley, Springer Nature, and Taylor & Francis) took longer than 50 days on average to publish accepted papers. By 2020, all four of them published accepted papers in less than 40 days.

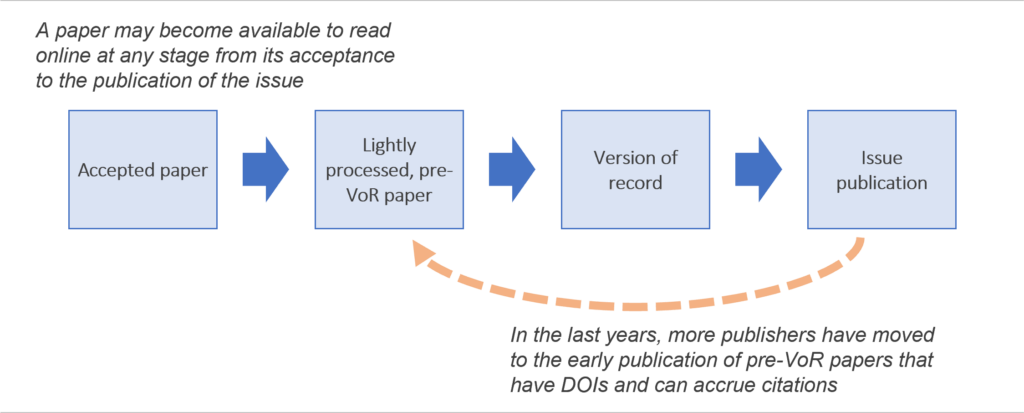

As noted above, the acceleration is partly due to the transition to continuous online publishing. Papers are now made available to read as soon as the production process is complete, ahead of the completion of their issue.

The acceleration is also partly due to the early publication of lightly processed pre-VoR articles. Papers may be published online before copyediting, typesetting, pagination, and proofreading have been completed. Such papers can carry a DOI that is then passed on to the VoR, so they can already accrue citations.

Barring MDPI, peer review appears static

MDPI is a fast-growing and controversial publisher. It grew phenomenally from 2016 to 2021 and has now cemented its position as the fourth largest publisher by paper output in scholarly journals. MDPI has attracted criticism from researchers and administrators who claim that it pursues operational speed at the expense of editorial rigor.

Indeed, MDPI is the fastest large publisher by a wide margin. In 2020, it accepted papers in 36 days and published them 5 days later. ACS, the second fastest publisher in this report, took twice as long to accept papers (74 days) and published them 15 days after acceptance.

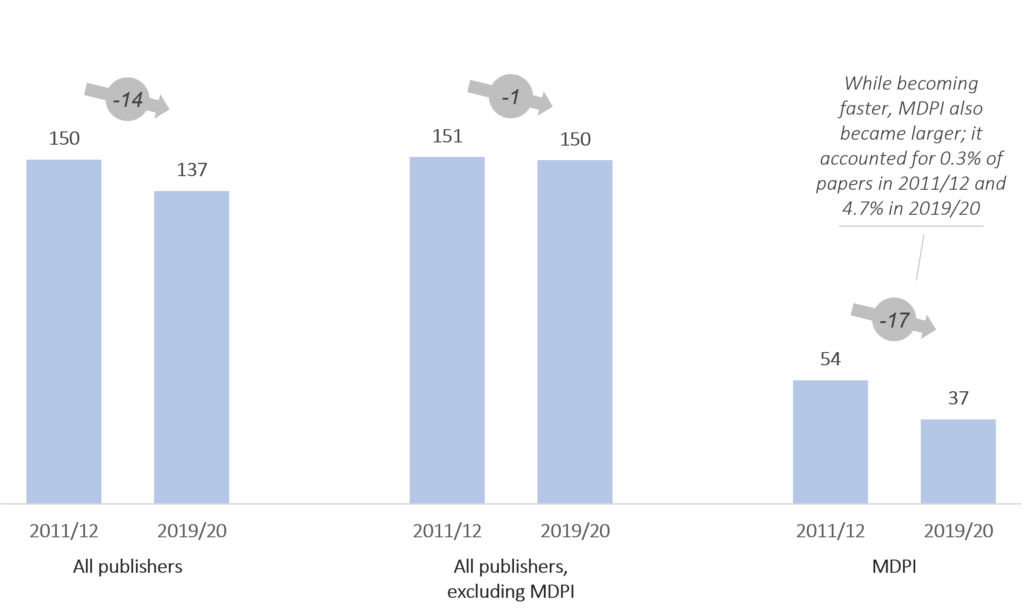

As MDPI grew, it pulled down the industry’s average peer review time. Including MDPI, peer review accelerated by about 14 days from 2011/12 to 2019/20 (from 150 to 137 days). Excluding MDPI, it barely changed (from 151 to 150 days).
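Why can one publisher move the industry average so much? A pooled mean weights each paper equally, so a fast publisher with a growing share of papers pulls the average down even if no other publisher changes. The sketch below uses toy numbers, not data from this study, and a hypothetical `mean_review_days` helper to show the effect of excluding one publisher.

```python
from statistics import mean


def mean_review_days(papers, exclude_publisher=None):
    """Mean submission-to-acceptance time, optionally leaving one publisher out.

    `papers` is an iterable of (publisher, review_days) pairs, a hypothetical
    layout used only for illustration.
    """
    values = [days for publisher, days in papers if publisher != exclude_publisher]
    return mean(values)


# Toy sample, NOT data from the study: one fast publisher among slower ones.
sample = [("FastPub", 40), ("FastPub", 40), ("OtherA", 150), ("OtherB", 150), ("OtherC", 150)]
print(mean_review_days(sample))             # 106
print(mean_review_days(sample, "FastPub"))  # 150
```

Adding more `FastPub` papers to the toy sample lowers the first figure further while the second stays at 150, which is the pattern the MDPI comparison describes.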

Peer review by category ranges from 94 days (Structural Biology) to 322 days (Organizational Behavior & Human Resources)

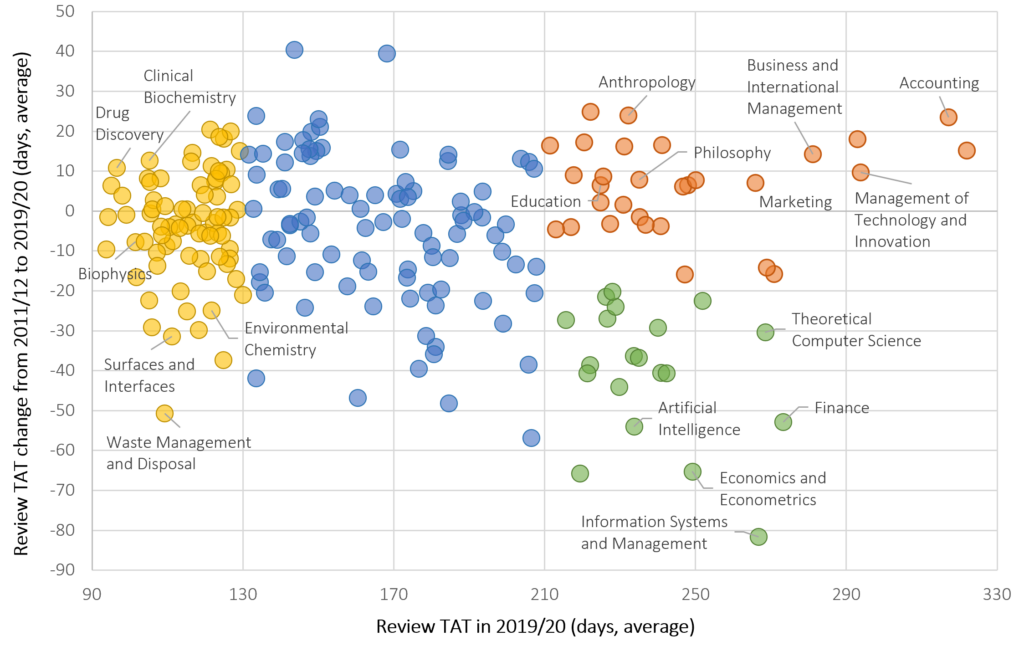

While the speed of peer review appears static over the last decade (when MDPI is excluded), a breakdown by category reveals a more varied landscape. The chart above shows the peer review speed and trajectory by category from 2011/12 to 2019/20 (excluding MDPI) for the 217 Scimago (Scopus data) categories with large enough samples of journals (>15) and papers (>120) in each period.

About 35% of categories (77) accelerated by 10 or more days, and about 20% (44) slowed down by 10 or more days. The remaining 44% of categories (96) were roughly static.

Three blocks stand out. The bottom-right, green block shows categories that are slow but accelerating; it includes several categories in Technology and some in Finance & Economics. The top-right, orange block shows categories that have been slow with no notable acceleration; it includes several categories in the Humanities and Social Sciences, with Management categories the slowest of all. The left, yellow block shows the fastest categories, where peer review takes less than 130 days and in some cases less than 100 days; it includes categories in Life Sciences, Biomedicine, and Chemistry. Finally, the central, blue block mostly includes categories in Physical Sciences and Engineering.
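The accelerated, slowed, and static groups of categories come from a simple threshold on each category’s change in review time. A minimal sketch, assuming the change is measured as 2019/20 minus 2011/12 and that a change of exactly 10 days counts (as ‘10 or more days’ suggests); `classify` is a hypothetical helper, while the counts are the ones reported above.

```python
CATEGORIES_TOTAL = 217  # Scimago categories meeting the sample-size thresholds


def classify(change_days: float, threshold: float = 10) -> str:
    """Label a category by its change in mean review time between periods.

    change_days = review time in 2019/20 minus review time in 2011/12,
    so negative values mean faster peer review.
    """
    if change_days <= -threshold:
        return "accelerated"
    if change_days >= threshold:
        return "slowed"
    return "static"


counts = {"accelerated": 77, "slowed": 44, "static": 96}  # counts from the post
assert sum(counts.values()) == CATEGORIES_TOTAL
for label, n in counts.items():
    # Shares of all 217 categories, to one decimal place.
    print(f"{label}: {n} ({100 * n / CATEGORIES_TOTAL:.1f}%)")
```

The printed shares (35.5%, 20.3%, and 44.2%) round to the 35%, 20%, and 44% quoted above.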

Slowing down is not inevitable

While MDPI’s operational performance can raise questions about the rigor of its peer review process, the same criticism is harder to apply to ACS, a leading publisher in the field of chemistry and home to several top-ranked journals. Notably, 55 of ACS’s 62 journals on Scimago were ranked in the top quartile, and ACS has 5 top-20 journals in the category of ‘Chemistry (miscellaneous)’, a category that includes more than 400 journals.

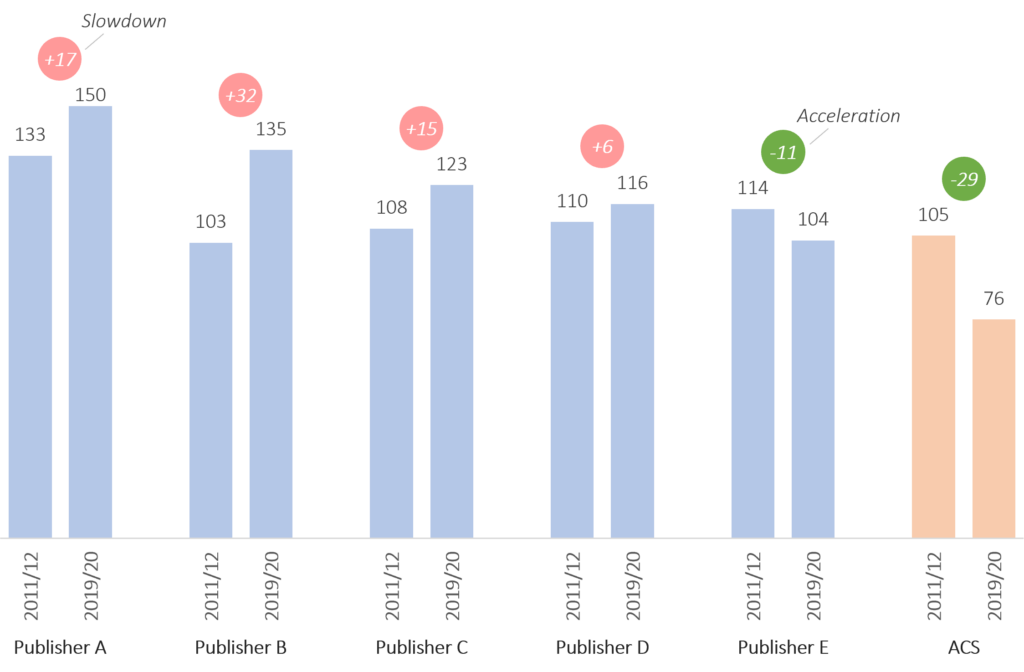

In addition to being selective, ACS is also fast. Across 12 chemistry-related categories, ACS accelerated by 29 days from 2011/12 to 2019/20, eventually accepting papers 76 days after submission. Meanwhile, the largest traditional publishers either maintained their performance or slowed down over the same period, with one publisher slowing down by more than 30 days.

ACS shows that it is possible to have high editorial standards and be fast at the same time. Its performance also suggests that the slow, and slowing, performance of traditional publishers is not inevitable but the result of operational inefficiencies that persist or worsen over time.

Gaps and coming up next

This article shows the direction of the industry, but it does not address a key question: is there a ‘right’ publishing speed? What TAT strikes the right balance among rapid dissemination, editorial rigor, and a quality outcome? What TAT do authors consider reasonable? And how do perceptions vary across and within demographic groups, and have they changed as preprints and MDPI set new standards?

I don’t have answers to these questions, but publishers should be able to find some by analyzing in-house data from author surveys and comparing papers’ TATs with author satisfaction.

The analysis concludes with 2020 data. It is likely that publishers have improved their performance in response to the rapid growth of MDPI. I do not have data for 2021 and 2022 at this point, but I am in the process of collecting and analyzing it in order to produce a detailed industry report.

TAT information can also be useful to authors, who may wish to take it into account when choosing where to submit their papers. While several impact metrics are available, publishers are the only source of TAT information, and they report it inconsistently and with gaps. A platform that provides TAT metrics in the same way that Clarivate’s JCR provides citation metrics would allow authors to make better-informed decisions.
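As a rough illustration of what such a platform might compute, the sketch below summarizes turnaround times per journal. The record layout, the `journal_tat_summary` name, and the choice of medians rather than means are all assumptions made for this example, and the journals and numbers are toy values.

```python
from collections import defaultdict
from statistics import median


def journal_tat_summary(records):
    """Summarize turnaround times per journal.

    `records` holds hypothetical (journal, review_days, production_days) tuples.
    Medians are used here (an assumption, not the post's method) because a few
    very slow papers can inflate a journal's mean.
    """
    by_journal = defaultdict(lambda: {"review": [], "production": []})
    for journal, review, production in records:
        by_journal[journal]["review"].append(review)
        by_journal[journal]["production"].append(production)
    return {
        journal: {
            "papers": len(v["review"]),
            "median_review_days": median(v["review"]),
            "median_production_days": median(v["production"]),
        }
        for journal, v in by_journal.items()
    }


# Toy records for two hypothetical journals, not real data.
records = [
    ("Journal A", 60, 10), ("Journal A", 80, 12), ("Journal A", 300, 14),
    ("Journal B", 120, 30), ("Journal B", 140, 40),
]
for journal, stats in journal_tat_summary(records).items():
    print(journal, stats)
```

Using medians keeps the single 300-day paper from dominating Journal A’s figure; a real platform would also need rules for missing or inconsistent timestamps.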
