When are journal metrics useful? A balanced call for the contextualized and transparent use of all publication metrics

The Declaration on Research Assessment (DORA) has yet to achieve widespread institutional support in the UK. Elizabeth Gadd digs further into the slow uptake. Although there is growing acceptance that the Journal Impact Factor is subject to significant limitations, DORA feels rather negative in tone: an anti-journal metric tirade. There may be times when a journal metric, sensibly used, is the right tool for the job. By signing up to DORA, institutions may feel unable to use metrics at all.

The recent Metric Tide report recommended that institutions sign up to the San Francisco Declaration on Research Assessment (DORA). DORA was initiated by the American Society for Cell Biology and a group of other scholarly publishers and journal editors back in 2012 in order to “improve the ways in which the outputs of scientific research are evaluated”. Principally, it is a backlash against over-use of the Journal Impact Factor to measure the research performance of individual authors or individual papers, although its recommendations reach further than that. Subsequent to the publication of DORA, the bibliometric experts at CWTS in Leiden published the Leiden Manifesto (April 2015). This too is set against the “Impact Factor obsession” and offers “best practice in metrics-based research assessment so that researchers can hold evaluators to account, and evaluators can hold their indicators to account”. There is no option to sign up to this.

Having attended a couple of events recently at which it was highlighted (with something of a scowl) that only three UK HE institutions had signed up to DORA (Sussex, Manchester and UCL if you’re interested), I set about trying to ascertain where the UK HE community had got to in their thinking about this. I created a quick survey and advertised it on Lis-Bibliometrics, a forum for people who are interested in the use of bibliometrics in UK Universities with 533 members, and more recently to the Metrics Special Interest Group of the Association of Research Managers and Administrators (ARMA) with again over 500 members. Simply put, I was interested in whether institutions had signed, why or why not, what they saw as the pros and cons, and whether they were thinking about developing an internal set of principles for research evaluation – something else recommended by the Metric Tide Report. The survey was open between 9 September and 6 October 2015.

Image credit: Johnny Magnusson (Website) Public Domain

Perhaps rather tellingly, only 22 people responded. Twenty-two out of a potential 1000 – a response rate of about 2%. Now, there could have been a number of reasons for this: survey fatigue; not reaching the right audience; wrong time of year, etc., etc. However, as eight of the 22 openly declared that they’d not yet considered DORA (“don’t know what it is!”, stated one), I think it is more likely that the message hasn’t got out yet.

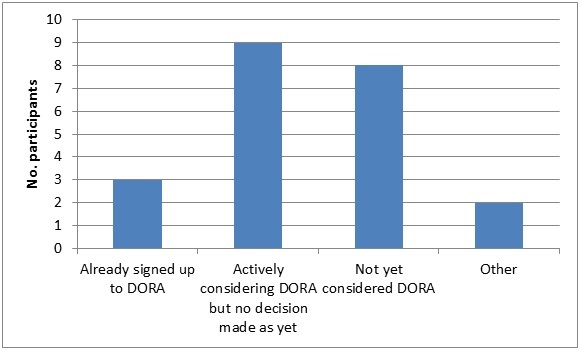

Of the remaining 16, three had already signed up to DORA and nine were actively considering it. Two other categories were offered (“actively considering and decided to sign” and “actively considering and decided not to sign”), but neither was selected. In subsequent free-text comments, two respondents mentioned that individuals within their organization had signed, and another pointed out that they were signatories by virtue of being members of LERU.

Figure 1: Which of the following best describes your institution?

The next question asked those that had decided to sign (or not) what their reasoning was. Although eight responses were received, only one really outlined their reasons: “It was about the principle(s), rather than over-evaluation of the precise wording. Also, it was about commitment to the direction of travel, rather than having to have everything in place before being able to sign up. Equally, signing up doesn’t mean we’re against using metrics; the opposite rather, that we’re wanting to use metrics, but in the right way(s).”

The follow-up question yielded more detail about the pros and cons of signing as the respondents saw them. There were nine responses. Amongst the pros were “making a stand for responsible use of metrics” and stopping “the use of some metrics that are unhelpful”. Indeed, one respondent felt that having raised the issue with senior managers had had a positive impact on “research assessment for academic promotion”, even though their institution hadn’t yet signed. Another hoped that by signing it would “keep administrators at bay that seek simplistic measures for evaluating complex issues”.

The cons were more varied. Three respondents were concerned that, as a result of signing, their institution might feel unable to use metrics at all and, indeed, that the Journal Impact Factor may actually be a useful metric in the right circumstances. One respondent was concerned that signing DORA in and of itself would not bring about institutional culture change and wondered what the process would be for dealing with those that did not comply. The latter point may have been implicit in the responses of two others, who raised the issue of who in the institution would actually be responsible for signing (and therefore, presumably, responsible for monitoring compliance).

Figure 2: Who has been involved in the deliberations?

A question about who had been involved in DORA deliberations at their institution showed that a wide variety of staff had taken part. Most often involved were senior University managers (10), followed by Library and Research Office staff (8). Interestingly, there are no librarians among the original list of DORA signatories, although now at least 88 of the 12,522 total signatories have Library in their job title. (This figure may be slightly inflated as I spotted at least one signatory who was listed three times – perhaps they were a strong advocate?)

It was pleasing to see that academic staff had also played a part in at least some institutions’ DORA discussions, for when we talk about research evaluation it is them and their work that we are talking about. It was perhaps disappointing that academic staff weren’t involved more often. It was also interesting that in one case a Union representative had been involved. I think when you consider the implications of DORA from all these perspectives, it is not surprising that making a decision to sign is sometimes a long and drawn-out process. Whilst a research manager might view DORA as a set of general principles – a “direction of travel” – could you be certain that a Union Rep wouldn’t expect more from it?

As an example of the aforementioned research manager, I get the point of DORA – don’t use journal measures to measure things that aren’t journals, and especially try to avoid the Journal Impact Factor, which is subject to significant limitations.

I’ve no problem with that. However, I’m concerned that DORA could be misinterpreted as a directive to avoid the use of all journal metrics in research evaluation, as there are times when a journal metric, sensibly used, is the right tool for the job. Indeed, in an ARMA Metrics SIG discussion around the DORA survey, Katie Evans, Research Analytics Librarian at the University of Bath, provided a great list of scenarios in which journal metrics may be a useful tool in your toolkit. I have her permission to reproduce them here:

- Journal metrics gave the best correlation to REF scores (Metrics Tide, Supplementary Report II). This suggests that we might want to use them at a University/Departmental level. That might then filter down to use to inform assessment of individuals’ publishing records so that there’s consistency.

- Not all academic journals are equal in quality standards. Looking at the journal an item has been published can indicate that the work has met a certain standard. Journal metrics are an (imperfect) indicator of this.

- Article-level citation metrics are no use for recently published items that haven’t had time to accrue citations. Journal metrics are available straight away.

- For publishing strategy – a researcher has some control over where they publish; it’s a choice they can actively make to aim for a high quality journal. A researcher doesn’t have this sort of direct control over article-level citations. So you could argue that it’s fairer to ask someone to publish in journals of a certain standard than to meet article-level citation criteria.

DORA, by contrast, feels rather negative in tone: an anti-journal metric tirade. One almost feels a bit sorry for Thomson Reuters (owners of the JIF) who, in my experience, always seem keen to set out both the value and limitations of bibliometrics in research evaluation.

Personally, I feel a lot more comfortable with the Leiden Manifesto, which takes a more positive approach: a balanced call for the sensible, contextualized and transparent use of all publication metrics. If the Leiden Manifesto were available for signing, I wouldn’t hesitate. So, how about it, Leiden?

The views expressed in this piece are my own and do not necessarily reflect those of my institution.

Note: This article gives the views of the author, and not the position of the Impact of Social Sciences blog, nor of the London School of Economics.

Elizabeth Gadd is the Research Policy Manager (Publications) at Loughborough University. She co-founded the Lis-Bibliometrics forum for those involved in supporting bibliometrics in UK Universities, and is the Metrics Special Interest Group Champion for the Association of Research Managers and Administrators. Having worked on a number of research projects, including the JISC-funded RoMEO Project, she is currently studying towards a PhD on the impact of rights ownership on the scholarly activities of universities.
