Source: https://www.cwts.nl/blog?article=n-r2x254

Do hyphens in article titles harm citation counts?

During the past few weeks, those of us with an interest in citation analysis may have stumbled upon catchy headlines such as “Hyphens in paper titles harm citation counts and journal impact factors”, “One punctuation mark has been skewing our scientific ranking system”, and “One small hyphen in a title could diminish academic success”. These are headlines of online reports covering the paper “Metamorphic robustness testing: Exposing hidden defects in citation statistics and journal impact factors” by Zhi Quan Zhou, T.H. Tse, and Matt Witheridge, which has been accepted for publication in IEEE Transactions on Software Engineering.

The paper claims that citation statistics reported by Scopus and Web of Science are unreliable, “as they can be distorted simply by the presence of hyphens in paper titles”. According to the paper, the algorithms used by Scopus and Web of Science to match references with the corresponding cited articles do not work well when cited articles include hyphens in their titles.

If the authors are right, this could undermine the validity of all citation analyses carried out worldwide based on data from Scopus and Web of Science. However, are the authors right? Elsevier and Clarivate Analytics, the producers of Scopus and Web of Science, both claim that the IEEE paper is flawed. They state that their reference matching algorithms are insensitive to the presence of hyphens in article titles. Of course, the business interests of Elsevier and Clarivate Analytics are threatened by the paper, so they have an interest in rejecting the conclusions of the paper. This makes clear that there is a need for a more independent perspective on the IEEE paper, which we aim to offer in this blog post.
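To make concrete what it could mean for reference matching to be “insensitive to the presence of hyphens”, here is a minimal Python sketch of title normalization before comparison. It is not the Scopus or Web of Science algorithm; the function names and normalization rules are hypothetical, and real reference matching also relies on fields such as authors, journal, year, volume, and pages rather than on titles alone.

```python
import re
import unicodedata


def normalize_title(title: str) -> str:
    """Reduce a title to a comparison key (hypothetical normalization rules)."""
    # Decompose accented characters and drop the combining marks.
    text = unicodedata.normalize("NFKD", title)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = text.casefold()
    # Treat hyphens, dashes, and all other non-alphanumeric characters as spaces.
    text = re.sub(r"[\W_]+", " ", text)
    # Collapse runs of whitespace into single spaces.
    return " ".join(text.split())


def titles_match(cited: str, candidate: str) -> bool:
    """Hypothetical check: do two titles reduce to the same key?"""
    return normalize_title(cited) == normalize_title(candidate)
```

With this kind of normalization, “Metamorphic robustness testing” and “Metamorphic-robustness testing” reduce to the same key. It would still fail to match “self-citation” with “selfcitation”, which illustrates why purely title-based matching is fragile and why production systems combine several fields.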

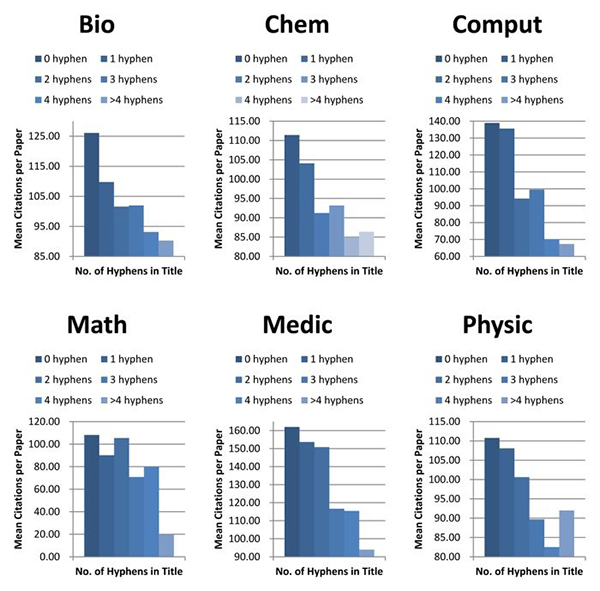

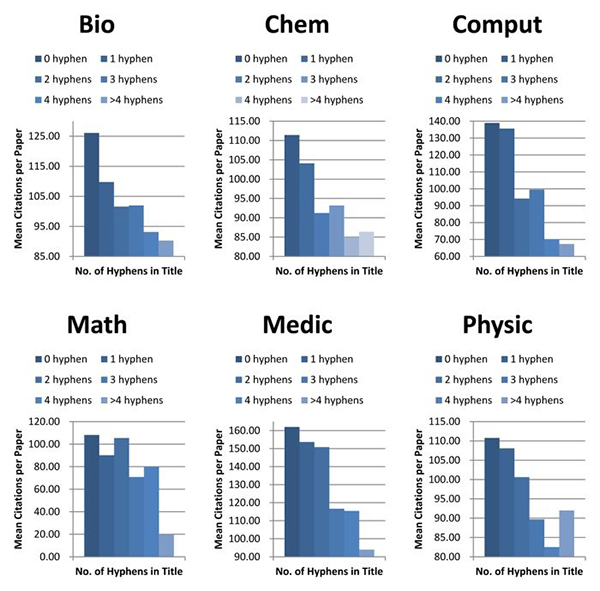

On average, the larger the number of hyphens in the title of an article, the lower the number of citations received by the article. (Source: University of Hong Kong)

Should we believe the IEEE paper?

The IEEE paper looks impressive. It has been accepted for publication in a journal that seems to have a good reputation. The paper presents many different analyses, apparently showing the robustness of the authors’ findings. Studies like this one may suffer from confusion of correlation and causality, but the authors explicitly discuss this point, suggesting that they are well aware of this important methodological issue.

Nevertheless, the IEEE paper is deeply flawed. Despite claiming to avoid it, the authors make the basic mistake of confusing correlation with causality. They compare citation counts of articles with and without hyphens in the title. Based on the observation that articles with hyphens in the title receive fewer citations on average than articles without hyphens, they conclude that the reference matching algorithms of Scopus and Web of Science do not handle hyphens properly. The authors analyze a few confounding factors (e.g., the field of research and the publication year of an article) and observe that these factors do not explain the differences in citation counts. However, many other potential confounding factors (e.g., the type of study, such as methodological vs. empirical, or the level of experience of the authors) are not considered, and therefore the strong causal conclusions drawn by the authors are not justified.
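The following Python sketch illustrates the confounding problem with made-up numbers. In this hypothetical population, citations depend only on the type of study, and hyphens have no effect by construction; methodological studies are simply assumed to use hyphens more often. The study types, citation levels, and hyphen shares are all invented for illustration and say nothing about real publications.

```python
from statistics import mean

# Hypothetical numbers, chosen only for illustration.
CITATIONS_PER_ARTICLE = {"empirical": 20, "methodological": 8}
HYPHENATED_PER_100 = {"empirical": 20, "methodological": 60}


def build_population():
    """Return (study_type, has_hyphen, citations) tuples, 100 articles per type.

    Citations depend only on the study type; the hyphen has no causal effect.
    """
    articles = []
    for study_type, citations in CITATIONS_PER_ARTICLE.items():
        for i in range(100):
            has_hyphen = i < HYPHENATED_PER_100[study_type]
            articles.append((study_type, has_hyphen, citations))
    return articles


def mean_citations(articles, has_hyphen, study_type=None):
    """Mean citations for articles with or without hyphens, optionally within one study type."""
    return mean(
        c for t, h, c in articles
        if h == has_hyphen and (study_type is None or t == study_type)
    )


if __name__ == "__main__":
    population = build_population()
    # Naive comparison: a gap appears (11 vs. 16 citations on average).
    print(mean_citations(population, True), mean_citations(population, False))
    # Stratified comparison: no gap within either study type.
    for study_type in CITATIONS_PER_ARTICLE:
        print(study_type,
              mean_citations(population, True, study_type),
              mean_citations(population, False, study_type))
```

The naive comparison suggests that hyphens “cost” five citations, yet within each study type hyphenated and non-hyphenated titles are cited equally. Controlling for field and publication year, as the IEEE paper does, would not remove such a gap if the real driver is a factor that was never measured.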

Another deeply problematic element in the IEEE paper is the analysis of journal impact factors. The authors claim that journal impact factors are affected by the alleged shortcomings of the reference matching algorithm of Web of Science. However, anyone familiar with the calculation of journal impact factors will understand that this is impossible. To calculate journal impact factors, citations are counted directly at the level of journals, not at the level of the individual articles within a journal. Only the name of the cited journal and the publication year of the cited work matter when counting the number of references citing a particular journal. The approach taken by Clarivate Analytics to calculate journal impact factors is well known and has been the subject of considerable debate. Apparently, the authors of the IEEE paper are completely unaware of this.
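For readers less familiar with the calculation, the following Python sketch shows why titles play no role. It assumes the standard two-year definition: the impact factor of a journal in year Y is the number of citations given in Y to anything the journal published in Y-1 and Y-2, divided by the number of citable items it published in those two years. The `Reference` record and `impact_factor` function are hypothetical, and we assume cited journal names have already been normalized; the cited title is carried along only to show that it is never read.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reference:
    """One reference in a citing article (hypothetical record layout)."""
    citing_year: int
    cited_journal: str  # assumed already normalized to a canonical name
    cited_year: int
    cited_title: str    # carried along, but never used below


def impact_factor(references, journal, year, citable_items):
    """Two-year impact factor of `journal` in `year`.

    citable_items maps a publication year to the number of citable items
    (e.g., articles and reviews) the journal published in that year.
    """
    window = (year - 1, year - 2)
    # Count at the journal level: only the cited journal and the years matter.
    citations = sum(
        1 for r in references
        if r.citing_year == year
        and r.cited_journal == journal
        and r.cited_year in window
    )
    return citations / sum(citable_items[y] for y in window)
```

Because `cited_title` never enters the count, adding or removing hyphens in the titles of cited articles cannot change the result in this sketch, whatever a reference matching algorithm does at the article level.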

How to communicate about mistakes in a scientific paper?

In addition to the issues discussed above, there are various other reasons for rejecting the conclusions drawn in the IEEE paper. Before writing this blog post, we contacted the authors of the IEEE paper and shared our criticism of their work with them. The comments that we sent to the authors can be found here. We offered to discuss the comments either by e-mail or in a phone call. Unfortunately, the authors declined to engage in any further discussion, indicating that they prefer the discussion to take place through formal channels (i.e., through papers published in IEEE Transactions on Software Engineering). This is a disappointing response. Research should be a joint effort aimed at producing the highest-quality scientific knowledge. Writing papers is one way in which researchers can communicate with each other and share new knowledge, but it should not be the only way. A direct personal discussion typically offers a more efficient and more effective way of identifying misunderstandings and correcting mistakes. We regret the unwillingness of the authors of the IEEE paper to engage in such a discussion.

We also shared our concerns with the Editor-in-Chief of IEEE Transactions on Software Engineering. The editor has invited us to submit a paper to the journal in which we express our concerns. Although we see this as a somewhat inefficient way of addressing the fundamental mistakes made in the IEEE paper, we are indeed considering submitting such a paper. We hope this will lead to a deeper editorial investigation, eventually resulting in a correction or retraction of the paper.

Can we trust Scopus and Web of Science citation counts?

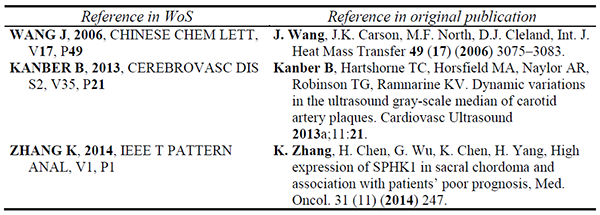

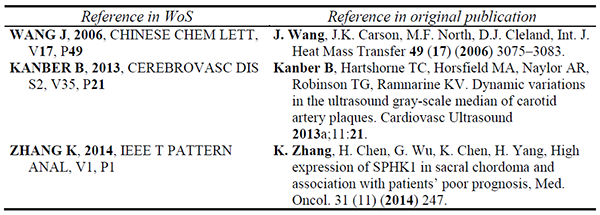

Does this mean we can blindly trust citation statistics provided by Scopus and Web of Science? No. Citation statistics in both databases suffer from problems. This is almost unavoidable, since algorithmic reference matching will never be perfect. However, some problems in the Scopus and Web of Science reference matching algorithms, which we studied in detail in a conference paper published in 2017, require careful attention from the database producers. These problems affect a small but not entirely insignificant share of the references in the two databases. The phenomenon of so-called phantom references in Web of Science, where a reference is replaced by a completely different reference, is perhaps the most important problem (see below for examples of such references). We hope that the renewed attention to the issue of reference matching will encourage database producers to further improve the quality of their algorithms.

Examples of phantom references in Web of Science. (Source: Van Eck & Waltman, 2017, Table 3)