In my last blog post, I tried to identify seminal papers using a variety of methods. These were divided into two main categories.

The first category was to look at text written by other authors mentioning that certain works were seminal.

The most straightforward way was to search citation statements/context in scite.ai for keyword phrases like "Seminal works" + Topic.

Many search engines are now implementing Q&A capabilities that use the latest state-of-the-art large language models (LLMs), such as OpenAI's GPTx models or open-source ones like Google's Flan-T5, to extract answers. Could we improve on keyword searching and use those semantic search features instead?

The second category of methods is via bibliometrics. I talked about the Connected Papers "Prior Papers" feature as well as a somewhat complicated bibliometric technique called "Reference Publication Year Spectroscopy (RPYS)", which can be done with a variety of tools, including Bibliometrix and CitedReferencesExplorer (CRExplorer).

All these methods work decently well, except the method using Q&A/semantic search. For whatever reason, the "Ask a question" feature in scite does not work well. Neither does Elicit.org.

Instead, I have since found two tools, Perplexity and the new Bing, which work much better at finding seminal papers or works.

Perplexity - a startup using OpenAI's GPT models

When I first heard about Elicit.org, it was an early partner with access to OpenAI's APIs. Elicit tries to combine scholarly search (on Semantic Scholar's open corpus) with GPT-3. I was extremely excited, and you have seen me mention it several times in the past year as I keep close tabs on it.

I became aware of Perplexity later. In a way it is the counterpart to Elicit, except that it searches the whole web and then extracts answers from the top results. I am not sure which search engine it uses, but I think it's Bing!

Some of you must be thinking, isn't that the same as the improved Bing+chat Microsoft recently launched?

Indeed, the idea is remarkably similar, but the main difference is that Perplexity is free, has been live far longer, and you don't need to get onto a waiting list to access it.

I cover in detail some of the use cases for Perplexity (e.g. using it to answer specific questions about your library service) here and here, but for the purposes of this post, we are going to focus on using it to find seminal papers or works.

There are two ways to do this. You can just ask Perplexity directly.

The results aren't perfect but are pretty decent, at least for the first few citations. You might be troubled by the fact that the sources cited come from sites like Wikipedia (you can click on the "view list" button to see the full URL).

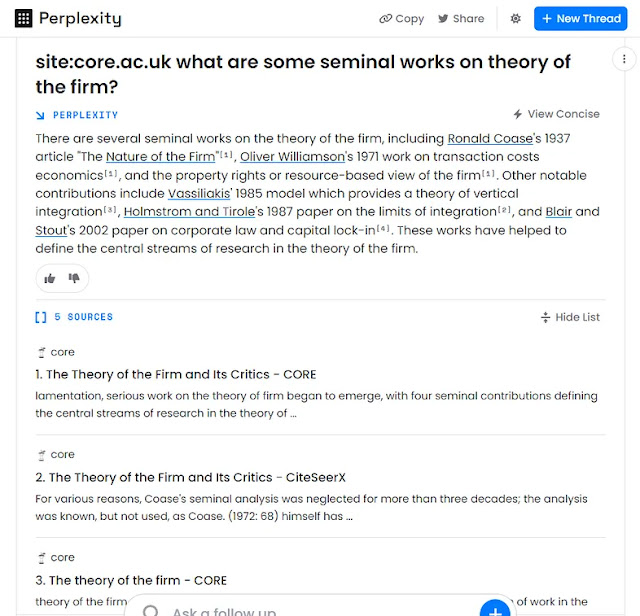

One way around this is to force Perplexity to only pull up results from scholarly domains with the site: operator.

Forcing the results to the Google Scholar domain kind of works, but Google Scholar doesn't itself host papers, so you will only get results extracted from the title, abstract, etc.

I settled on using the following two domains

- CORE (core.ac.uk/)

- Semantic Scholar (semanticscholar.org)

I chose these because they are the two largest Open Access sources and they host the papers on their own domains.

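So how do you restrict Perplexity to just results from one domain?

In Perplexity, simply type site: followed by the domain and then your question, for example:

site:core.ac.uk what are some seminal works on theory of firm?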

The nice thing is that the sources are all open access, so you can click on the links to check that the sources really do say these papers are seminal.

Do note that in most cases the sources are not the seminal works themselves. For example, the source saying Coase (1937) is seminal is obviously not Coase itself! But you can check the source to confirm it says Coase is seminal.
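If you have many sources to check, a few lines of Python can pull out the sentences in a source that mention both the author and the word "seminal". This is only a rough sketch: the function name and the sample text are made up for illustration, and the sentence splitting is deliberately naive.

```python
import re
from typing import List


def sentences_calling_seminal(text: str, author: str) -> List[str]:
    """Return sentences that mention both `author` and the word 'seminal'."""
    # Naive split: break after '.', '!' or '?' followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [
        s for s in sentences
        if author.lower() in s.lower() and "seminal" in s.lower()
    ]


# Made-up sample text standing in for a paragraph copied from a citing paper.
sample = (
    "Building on the seminal work of Coase (1937), we study firm boundaries. "
    "Later studies extended this view."
)

for sentence in sentences_calling_seminal(sample, "Coase"):
    print(sentence)
```

Abbreviations such as "et al." will trip up the naive split, so treat the output as a pointer to where to read, not as a verdict.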

You get similarly decent but not perfect results when restricting to semanticscholar.org:

site:semanticscholar.org what are some seminal works on theory of firm?
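If you want to run the same question over several domains, you can build the query strings with a small script and paste them into Perplexity. This is a minimal sketch: the helper name site_query is hypothetical, and nothing here calls Perplexity itself.

```python
# Domains used in this post; swap in any others you want to try.
SCHOLARLY_DOMAINS = ["core.ac.uk", "semanticscholar.org"]


def site_query(domain: str, question: str) -> str:
    """Prefix a natural-language question with a site: restriction."""
    # Drop any scheme (https://) and trailing slash so only the bare domain remains.
    bare = domain.strip().split("://")[-1].rstrip("/")
    return f"site:{bare} {question.strip()}"


question = "what are some seminal works on theory of firm?"
queries = [site_query(d, question) for d in SCHOLARLY_DOMAINS]

for q in queries:
    print(q)
```

The output is just text to paste into the search box, so the same strings should work in any search engine that supports the site: operator.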

Before I move on to the new Bing, I would add there is nothing special about this particular use case.

With this technique, you can ask Perplexity any direct question over papers and there's a good chance it can answer.

Here is just one example.

For this type of question, again the source is not the actual paper that coined the term, Piwowar et al. (2018), but a citing paper that mentioned it. Depending on your query, sometimes the source could be the paper itself.

Bing+GPT - the engine that caused Google to panic

I'm sure you have read about how Bing launched a chatbot combining a search engine with OpenAI's GPT, which caused Google to go into red alert.

Yes, it's that groundbreaking. In one sense, this promises to be similar to Perplexity. But in practice, I find its capabilities go a step further.

In any case, you can just ask it to find seminal papers.

It not only finds you seminal works but can talk generally about them.

The follow-up prompts are really good; you can ask it to compare and contrast approaches.

The answer might not be completely spot on...

The other cool thing is that, like Perplexity, you can also restrict your results to a specific domain.

Let's start with a definition.

Okay, let's ask for studies on it, but restricted to just core.ac.uk. The cool thing is you can just ask it in natural language, and it knows what to search!

Other things you can try include asking it to

- describe the findings of the paper

- find critiques of a paper

- find papers that agree with, support or contradict a paper

and many more...

Conclusion

Honestly, I am blown away by the power of adding a search engine to large language models. Using language models alone to write papers or answer questions often ends up with the model making up references.

Once you add a search engine, it rarely does so.

In a way this makes sense. Asking a language model like ChatGPT to write an essay unaided is like asking a human to write an essay unaided by anything but his brain. He may be able to remember a reference or two, but will sometimes foul up a reference.

Without an external search to aid it, the language model is relying only on the things it "learnt" during training, which are stored as weights in its neural nets. This is similar to our human brains. "Hallucination" will occur.

Adding a search engine so it can "read" and extract answers means it will not make up references most of the time. But be warned, it can still "misinterpret" what is in those references!

Regardless, I think this technology is a huge game changer and it will only get better....

Natural Language Processing capabilities have finally gotten good enough that we now essentially have actual working semantic search that can extract the answer from papers! This is something that has been promised for the last 20 years!

I am sticking my neck out to predict that within 5 years at most, every search (including library search) will do something like this!

There are many implications to this technology, something I will cover in a future post...
