Source: https://musingsaboutlibrarianship.blogspot.com/2023/03/how-q-systems-based-on-large-language.html

How Q&A systems based on large language models (eg GPT4) will change things if they become the dominant search paradigm - 9 implications for libraries

Warning : Speculative piece!

I recently did a keynote at the IATUL 2023 conference where I talked about the possible impact of large language models (LLMs) on academic libraries.

Transformer-based language models, whether encoder-based ones like BERT (Bidirectional Encoder Representations from Transformers) or auto-regressive decoder-based ones like GPT (Generative Pretrained Transformers), bring near human-level capabilities in NLP (Natural Language Processing) and (particularly for decoder-based GPT models) NLG (Natural Language Generation).

This capability can be leveraged in almost unlimited ways. Libraries can use capabilities like text sentiment analysis, text classification, Q&A, code completion and text generation for a wide variety of tasks, particularly since such LLMs are so general that they need minimal finetuning (which can "realistically be used by researchers with relatively little programming experience") to work well.

This article in ACRL provides a brief but hardly comprehensive series of possible uses. For example, it does not include uses such as OCR correction for digitization work and other collections-related work. Neither does it talk about copyright issues, or the role libraries may play in supporting data curation and open source Large Language Models.

Yes, I don't think it takes a futurist to say that the impact of LLMs and workable Machine Learning, Deep Learning is going to be staggering.

But for this article I'm going to focus on one specific area I have some expertise in, the implications of large language models (LLMs) on information retrieval and Q&A (Question and answer tasks) where LLMs are used in search engines to extract and summarise answers from top ranked documents.

I argue this new class of search engines represents a brand-new search paradigm that will catch on and change the way we search for, obtain and verify answers.

We essentially have the long-promised Semantic Search over documents, where instead of the old search paradigm of showing 10 blue links to possibly relevant documents, we can now extract answers directly from webpages and even journal articles, particularly Open Access papers.

What implications will this have for library services like reference and information literacy? Will this give an additional impetus to the push for Open Access? How will this new search paradigm affect relationships between content owners like Publishers and discovery and web search vendors?

1. The future of search or information retrieval is not using ChatGPT or any LLM alone but one that is combined with a search engine.

and this is well and good.

But while large language models will certainly be used in many of the ways described in these articles, in the long run they will not, I believe, be used on their own for information retrieval or, in the library context, reference services.

Instead, the future belongs to tools like the New Bing, Perplexity.ai and Google's Bard, which combine search engines with Large Language Models.

Technically speaking, many search engines you use today, like Google and Elicit.org, might already be using Large Language Models under the hood to improve query interpretation and relevancy ranking. For example, Google already uses BERT models to better understand your search query, but this is not visible to you and is not the LLM use I am referring to. This type of search, where queries and documents are converted into vector embeddings using the latest transformer-based models (BERT or GPT-type models) and are matched using something like cosine similarity, is often called Semantic Search. Unlike mostly keyword-based techniques, it can match queries with relevant documents even if the keywords are very different. But at the end of the day these engines still revert to the two-decade-old paradigm of showing the most relevant documents and not the answer.
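To make the vector matching described above a little more concrete, here is a minimal sketch of ranking by cosine similarity. The three-dimensional "embeddings" are hand-made, hypothetical numbers chosen for illustration; a real system would obtain much longer vectors from a transformer model, and the titles and query are invented.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy embeddings (assumption): real ones come from a model
# and have hundreds or thousands of dimensions.
DOCS = {
    "Library opening hours during exam period": [0.9, 0.1, 0.0],
    "How to cite a website in APA 7th": [0.1, 0.9, 0.1],
    "Bloomberg terminal user guide": [0.0, 0.2, 0.9],
}

def rank(query_vec, docs):
    """Return (score, title) pairs, most similar first."""
    scored = [(cosine_similarity(query_vec, vec), title)
              for title, vec in docs.items()]
    return sorted(scored, reverse=True)

# Imagined embedding of "When does the building close?", a query that
# shares no keywords with the title it should match.
QUERY_VEC = [0.8, 0.2, 0.1]
RANKED = rank(QUERY_VEC, DOCS)

for score, title in RANKED:
    print(f"{score:.3f}  {title}")
```

The point of the toy example is only that the match is made on vector direction, not on shared words, which is why such systems can pair a query with a document that uses entirely different vocabulary.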

These tools work in a variety of ways but, broadly speaking, they do the following:

1. User query is interpreted and searched

How this is done is not important. More advanced systems, like OpenAI's unreleased WebGPT, hook up an LLM with a search API and train it to learn what keywords to use (and even whether to search!) by "training the model to copy human demonstrations". But this is not necessary, and this step could be accomplished with something as simple as a standard keyword search.

2. Search engine ranks top N documents

Results might be ranked using a traditional search ranking algorithm like BM25 or TF-IDF, or using "semantic search" with transformer-based embeddings (see above), or using some hybrid multi-stage ranking system with more computationally expensive methods in later stages.

3. Of the top ranked documents, it will try to find the most relevant text sentences or passages that might answer the question

Again, this usually involves using embeddings (this time at sentence level) and similarity matches to determine the most relevant passage. Given that GPT-4 has a much bigger context window, one can possibly even just use the whole page or article for this step instead of passages.

4. The most relevant text sentences or passages will then be passed over to the LLM with a prompt like "Answer the question in view of the following text".
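The four steps above can be sketched in a few lines. This is only an illustration under stated assumptions, not how Bing or Perplexity actually work: the user's question is used directly as the query (step 1), BM25 ranks the pages (step 2), BM25 is reused as a stand-in for sentence-level embedding similarity when picking passages (step 3), and the function stops at building the prompt (step 4) rather than calling any real LLM API. The pages, function names and passage numbering are all invented for the example.

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"\w+", text.lower())

def bm25_scores(query, texts, k1=1.5, b=0.75):
    """Score each text against the query with the standard BM25 formula."""
    docs = [tokenize(t) for t in texts]
    n = len(docs)
    avg_len = sum(len(d) for d in docs) / n
    # Document frequency: in how many texts does each term appear?
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in tokenize(query):
            if tf[term] == 0:
                continue
            idf = math.log((n - df[term] + 0.5) / (df[term] + 0.5) + 1)
            length_norm = k1 * (1 - b + b * len(d) / avg_len)
            score += idf * tf[term] * (k1 + 1) / (tf[term] + length_norm)
        scores.append(score)
    return scores

def top_k(query, texts, k):
    """Return the k highest-scoring texts, best first."""
    scores = bm25_scores(query, texts)
    order = sorted(range(len(texts)), key=lambda i: scores[i], reverse=True)
    return [texts[i] for i in order[:k]]

def split_sentences(text):
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def build_prompt(question, pages, n_pages=2, n_passages=2):
    ranked_pages = top_k(question, pages, n_pages)             # step 2
    sentences = [s for p in ranked_pages for s in split_sentences(p)]
    passages = top_k(question, sentences, n_passages)          # step 3
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, 1))
    return ("Answer the question in view of the following text.\n\n"
            f"{context}\n\nQuestion: {question}\nAnswer:")     # step 4

# Invented example pages (assumption).
PAGES = [
    "The library doors open at 8am on weekdays. "
    "The library closes at 10pm on weekdays.",
    "Printing costs five cents per page. "
    "Food is not allowed in the reading room.",
    "Bloomberg terminals are in the business school. "
    "Book a terminal online.",
]
QUESTION = "When does the library open on weekdays?"
PROMPT = build_prompt(QUESTION, PAGES)
print(PROMPT)
```

The resulting string is what would be handed to the LLM. Because the passages are numbered, the model's answer can point back at the exact sentences it drew on, which is roughly the behaviour that makes these tools easier to check than an LLM used alone.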

Why is this better for information retrieval than just using a LLM like ChatGPT alone?

There are two reasons.

Firstly, it is known that LLMs like ChatGPT may "hallucinate" or make things up. One way to counter this is to ask it to give references.

Unfortunately, it is well known by now that ChatGPT tends to make references up as well! Depending on your luck you may get references that are mostly real, but most likely you will get mostly fake references.

The authors are wrong, though plausible. For example, Anne-Wil Harzing (of Harzing's Publish or Perish software) does publish papers on the size of Google Scholar vs Scopus but has not, to my knowledge, published papers that directly estimate the size of Google Scholar. The actual paper that answers the question was published in PLOS ONE in 2014 by Madian Khabsa and C. Lee Giles.

The same applies to the new Bing.

Both Bing and Perplexity use OpenAI's GPT models via APIs and as such work similarly.

Perplexity even shows the sentence passage used to help generate the answer

Compare ChatGPT to these search engines. Because these new search engines link to actual papers or webpages, they cannot, by definition, make up references. They can still misinterpret the citations, of course.

For example, I've found such systems may confuse the findings of other papers mentioned in the literature review portion with what the paper actually found.

Still, this seems better than having no references for checking or fake references that don't exist.

As I write this, GPT-4 has been released and it seems to generate far fewer fake references. This is quite impressive since it is not using search.

Secondly, even if LLMs used alone without search can generate mostly real citations, I still wouldn't use it for information retrieval.

Consider a common question that you might ask the system: what are the opening hours of <insert library>?

ChatGPT got the answer wrong. But more importantly without real-time access to the actual webpage, even if it was right the best it can do is to reproduce the website it saw during training and this could be months ago!

Retraining LLMs completely is extremely expensive, so the data a model is trained on is always going to be months behind. Even if you simply finetune the full model rather than retrain it from scratch, it will still not update as quickly as a real-time search.

Adding search to a LLM solves the issue trivially, as it could find the page with the information and extract the answer.

Technically speaking, these systems match documents based on the cached copy the search engine sees, so this can be hours or days out of date. But my tests show they will actually scrape the live page of these documents for answers, so the answers are based on real-time information, or at least the information on the page at the time of the user query.

I did notice that Bing+Chat works similarly to ChatGPT and GPT in that its answers are not deterministic, i.e. there is some randomness to the answers (this is adjusted via a "temperature" variable in the API, which is set to 0.7; 0 = no randomness).

In fact, most of the time it gets the answer wrong. My suspicion is it's confused by tables.....
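As an aside on the "temperature" setting mentioned above, here is a minimal sketch of how temperature is commonly applied when a model picks its next token: the model's raw scores (logits) are divided by the temperature before being turned into probabilities, and a temperature of 0 is treated as always picking the top-scoring token. The candidate tokens and their scores are invented for illustration, and `sample_token` is a hypothetical helper, not part of any real API.

```python
import math
import random

def sample_token(logits, temperature, rng):
    """Pick one token from a {token: logit} dict at the given temperature."""
    if temperature == 0:
        # No randomness: always return the highest-scoring token.
        return max(logits, key=logits.get)
    scaled = {tok: score / temperature for tok, score in logits.items()}
    top = max(scaled.values())
    # Softmax weights (subtracting the max keeps exp() numerically stable).
    weights = {tok: math.exp(v - top) for tok, v in scaled.items()}
    tokens = list(weights)
    return rng.choices(tokens, weights=[weights[t] for t in tokens], k=1)[0]

# Hypothetical scores for the next word in "The library opens at ..."
LOGITS = {"8am": 2.0, "9am": 1.5, "10am": 0.5}

rng = random.Random(42)
GREEDY = [sample_token(LOGITS, 0, rng) for _ in range(200)]
SAMPLED = [sample_token(LOGITS, 0.7, rng) for _ in range(200)]

print(sorted(set(GREEDY)))   # a single distinct answer at temperature 0
print(sorted(set(SAMPLED)))  # typically several distinct answers at 0.7
```

This is why asking the same question twice can give different answers: with a non-zero temperature the less likely continuations are still chosen some of the time.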

2. Search+LLM will catch on quickly even if it is initially not very accurate

3. Decent chat bots for libraries are practical now

Like many industries, libraries have tried to create chatbots. I have never been impressed with them: they were either simple-minded "select from limited x options" systems that hardly deserved the name bot, or extremely hard-to-train systems that needed special training for individual questions and did well only on a very small, limited set of questions.

I believe chatbots are now practical. In the earlier part of my career, I spent time thinking about and implementing chat services for libraries. In general, chat service questions fall into the following major classes:

1. Queries about details of library services

2. APA questions

3. General Research Questions.

Another category is troubleshooting questions, which I did not test. Performance here depends mostly on how good your FAQs are for this class of questions, and I have not tried how it reacts to error messages being relayed to it.

Using just the new Bing+Chat which was not optimized for the library chatbot use case, the results are amazing.

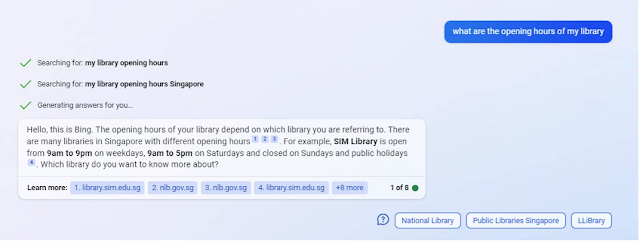

I start off asking Bing about opening hours of my library.

It knows I'm from Singapore and it correctly points out it depends on which library I am talking about.

Again, it finds the right page but misinterprets the table on the page.

Of course, you might think, this isn't a big deal, your library bot can answer this and correctly too. But the difference is here we have a bot that isn't specifically trained at all! All it did was read your webpage and it found the answers. It even has a conversational, librarian-like demeanor!

I know from past tests that ChatGPT is pretty good at APA citing, if you give it the right information.

I was curious to see whether, given that Bing+GPT can actually "see" the page you are referring to, it might be able to give you the right citation when you pass it a URL.

The results are disappointing. It is unable to spot the author of the piece. Even when I prompted it to summarise the URL which triggered it to search and "See" the page, when I asked it for APA 7th it couldn't spot the author. But of course when I supplied the author it took that into account.

In general, while it is possible with some work to anticipate the majority of non-research questions and train the bot for them, research questions can be about pretty much anything under the sun and are impossible to anticipate.

So it was the performance on the third class of question, research questions, that shocked me.

In my last blog post, I talked about how it could be used to find seminal works and even compare and contrast works.

4. Reference desk and general chat usage will continue to decline

Reference desk queries have been falling for over a decade, mostly due to the impact of Google and other search engines. This new class of search engines may be the final nail in the coffin.

Don't get me wrong, this does not mean reference is dead. It just means the general reference desk, or even general chat, will be in further decline.

The problem with the general reference query is this. Even if you are the most capable reference librarian in the world, there is no way you can know or be familiar with every subject under the sun.

The nature of general reference is that when a question is asked, unless it is on a topic you are already somewhat familiar with (unlikely if you are in a university that spans many disciplines), you will typically try to answer it as a stopgap, googling and skimming frantically to find something that might help before you pass it on to a specialist who has a better chance of helping.

But with this new search tool, it is doing what you are doing but faster! Look at the query below.

It was basically looking at the appropriate webpages for answers, and in the penultimate answer it was still smart enough to suggest alternative sources. When I asked how to do it in Bloomberg, it even gave me the command directly.

And more amusingly, the answer came from a FAQ written by my librarian colleague. I would have found the same, but nowhere near as fast.

Of course, for in-depth research queries, a specialist in the area would probably outdo the new Bing, as the answers to such questions tend to require nuanced understanding beyond current chatbots.

That is why it is well known the toughest, "highest tier" research questions go directly to specialists not via general reference or chat.

For example, some of the toughest questions I get relate to very detailed questions on bibliometrics, search functionality etc that I get direct from faculty who are aware of my expertise. These are unlikely to be affected.

But asking such questions of a random library staff member at general reference or chat is futile, because the chance that they reach someone who can realistically answer the specific research question is extremely low. In such a case, the average librarian isn't likely to outperform the new Bing.

5. Information needs to be discoverable even more (in Bing!) and a possible new Open Citation Advantage?

6. There will be huge information literacy implications beyond warning about fake references

But let's focus on the main theme of this blog post, a new search paradigm where search engines not only rank and display documents but also extract answers and display them. What information literacy implications are there?

It has been suggested that "prompt engineering", or coming up with prompts that produce better results, is essentially information literacy. I'm not too sure this makes sense, since the whole point of NLP (natural language processing) is that you can ask questions in natural language and still get good answers.

More fundamentally, users need to be taught how such search technologies are different from the standard "search and show 10 blue links" paradigm which has dominated for over 20 years.

How do the standard methods we teach like CRAAP or SIFT change if such search tools are used? Should we try to triangulate answers from multiple search engines like Bing vs Google Bard vs Perplexity etc?

7. Ranking of search engine results becomes even more important

There's also a practical issue here. In the old search paradigm, you can easily press next page for more answers. How do you do it for the new Bing? I've found that sometimes you can type "show me more" and it does get answers from lower ranked pages, but this isn't intuitive.

All in all, it seems even more important for such systems to rank results well. But how should they do it?

In OpenAI's unreleased WebGPT, they hooked up a GPT model with the Bing API and trained it to know how to search in response to queries, and when and what results to extract. They ran into a problem that every information literacy librarian is aware of: how to rate the trustworthiness of sources!

Should librarians "teach" LLMs like GPT how to assess the quality of webpages? The OpenAI team even hints at this by suggesting the need for "Cross-disciplinary research ... to develop criteria that are both practical and epistemically sound".

In the short run though, I hope such systems allow us to create whitelists and blacklists of domains to include in or exclude from results.
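As a rough sketch of what such a domain filter might look like, the snippet below keeps or drops search result URLs based on an allow list and a deny list. The function names, the rule that subdomains inherit their parent domain's status, and the example URLs are all assumptions made for illustration; no existing search tool is known to expose exactly this interface.

```python
from urllib.parse import urlparse

def domain_of(url):
    """Lower-cased host name of a URL, without a leading 'www.'."""
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host

def matches(host, domains):
    """True if host is one of the domains or a subdomain of one."""
    return any(host == d or host.endswith("." + d) for d in domains)

def filter_results(urls, allow=None, deny=None):
    """Drop denied domains; if an allow list is given, keep only those."""
    kept = []
    for url in urls:
        host = domain_of(url)
        if deny and matches(host, deny):
            continue
        if allow and not matches(host, allow):
            continue
        kept.append(url)
    return kept

# Invented example results (assumption).
RESULTS = [
    "https://www.example.edu/guide",
    "https://blog.example.com/post",
    "https://spam.example.net/x",
]

print(filter_results(RESULTS, deny={"example.net"}))
print(filter_results(RESULTS, allow={"example.edu"}))
```

A filter like this would sit between the ranking step and the answer extraction step, so that the LLM never sees passages from excluded sources.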

8. Long term use of such tools might lead to superficial learning.

9. Relative Importance of deep expertise increases

I was recently writing an article for work on the CRediT taxonomy and it took me around 30 minutes to write it all out. I later realized I could have just used ChatGPT or, even better, the new Bing, and it would have generated perfectly serviceable writing.

It wasn't better than what I wrote, mind you. Just much faster. In hindsight, I probably should have realized that there are tons of pages written on the CRediT taxonomy on the web and that these systems can of course generate good writing on the topic. I could add some value by customizing it with local examples and making some particular points, but otherwise I should have started by auto-generating the text for the standard parts.

The lesson to take is this: if all your knowledge is so general that the answer can be found on webpages, you are probably in trouble....

For example, when I was writing the blog post on techniques for finding seminal papers, I checked with Perplexity and ChatGPT to see if there were any interesting techniques, but the best they could do was state obvious answers and not the fairly novel idea I had in mind of using Q&A systems.

After I wrote up my blog post, I used Bing to ask the same question. Its first answer was roughly the same, but when I asked it to show more methods it eventually found my newly posted blog post!

The point of this story is that it shows how important it is to have deep or at least unusual expertise, with ideas or points that aren't available on the web. Of course, the moment you blog about it or capture it on the web, it can be found and exploited!

But if everyone is using these new tools to extract or summarise answers, how will they gain the deep expertise to provide new insights and ideas? Someone has to come up with these ideas in the first place!

Conclusion

I'm poor at conclusions, so I will let GPT-4 have the last word.
