GPT4 defines prompt engineering as

the process of creating, designing, and refining prompts or questions that are used to generate responses or guide the behavior of an AI model, such as a chatbot or a natural language processing system. This involves crafting the prompts in a way that effectively communicates the desired information, while also considering the AI's capabilities and limitations to produce accurate, coherent, and relevant responses. The goal of prompt engineering is to improve the performance and user experience of an AI system by optimizing the way it receives instructions and delivers its output.

Or, as Andrej Karpathy, a computer scientist at OpenAI, puts it:

The hottest new programming language is English.

If you are a librarian reading this, you have likely wondered: isn't this a little like what librarians do? When a user approaches us at a reference desk, we are trained to do reference interviews to probe for what our users really want to know. In a similar vein, evidence synthesis librarians assist with systematic reviews by helping to develop protocols, which include problem formulation as well as the crafting of search strategies. Lastly, on the information literacy front, we teach users how to search.

In other words, are librarians the next prompt engineers?

As I write this, there is even a published article entitled "The CLEAR path: A framework for enhancing information literacy through prompt engineering", though it doesn't really teach one how to do prompt engineering as typically defined.

My first thought on prompt engineering was skepticism.

This post will cover some of the following:

- While there is some evidence that better prompts can elicit better outputs, is there truly a "science of prompting" or is it mostly snake oil?

- If there is something useful to teach, is it something that librarians are qualified and able to teach without a lot of upskilling? Or is it out of reach of the typical librarian?

At points, I try to draw parallels with the now well-established task of teaching how to search and see how well they hold up.

To anticipate the conclusion, my current view is that on the whole we probably want to be involved here, particularly if nobody else steps up. This is despite us being hazy on how effective prompt engineering can be.

Is there a science of prompting?

As librarians, we are always eager to jump in and help our users in every way we can. But I think we should be careful not to fall for hype and jump on bandwagons at the drop of a hat. The reputation of the library is at stake, and we do not want librarians to teach something that turns out to be mostly ineffective.

I admit my initial impression of "prompt

engineering" was negative because it seemed to me there was too much

hype around it. People were going around sharing super long complicated

prompts that were supposedly guaranteed to give you magical results by

just editing one part of the prompt.

As Hashimoto notes, a lot of this is what he calls "blind prompting":

"Blind

Prompting" is a term I am using to describe the method of creating

prompts with a crude trial-and-error approach paired with minimal or no

testing and a very surface level knowledge of prompting. Blind prompting

is not prompt engineering.

These types of "blind prompts" often feel to me more like magic incantations that someone found to work once, and that you just copy blindly without understanding why they work (if they do at all). Given how much of a black box neural nets (which transformer-based language models are) remain, how can we be sure a certain crafted prompt works better when we don't even understand why it might?

Another reason to be skeptical

of the power of such long magical prompts is from Ethan Mollick, a

professor at Wharton who has been at the forefront of using ChatGPT for

teaching in class.

In an interesting experiment, he found that students who went back and forth with ChatGPT in a co-editing or iterative manner got far better results when trying to write an essay than those who used simple prompts or those who wrote long, complicated prompts in one go.

This makes sense and parallels experiences in teaching searching. In general, slowly building up your search query iteratively will usually beat putting in all the search keywords in one go, particularly if this is an area you do not know.

This isn't evidence against the utility of

prompt engineering, just a caution about believing in long magical

prompts without iterative testing or strong evidence.

Looking at Prompt engineering courses and guides

To give prompt engineering a fair chance, I looked at some of the prompt guides listed by OpenAI themselves at OpenAI cookbook.

These guides included

I also looked through courses on Udemy and LinkedIn Learning. The courses on prompt engineering for the former looked more substantial, so I looked at

Here's what I found. To set the context, I have intermediate-level knowledge of how Large Language Models work and have been reading research papers on them (with varying amounts of comprehension) for over a year. As such, I didn't really learn much from this content that I didn't already know. (This isn't necessarily a terrible thing!)

The good thing is all

the prompt guides and the courses I looked at covered remarkably similar

ground. This is what you expect if you have a solid body of theory. So

far so good.

Most of them would cover things like

Reading some of these prompt guides gave me remarkably similar vibes to guides for doing evidence synthesis, for example the Cochrane Handbook for Systematic Reviews of Interventions: in the prompt guides, a best practice for prompting is often followed by an academic citation (see below for an example).

A lot of the advice given for prompt engineering tends to be common sense: be specific, give it context, specify how you want the output to look, and so on. Unless the language model is a mind reader, it won't know what you want!

But is that all there is to prompt engineering? Are there specific prompting techniques that are particular to large language models like ChatGPT and have evidence behind them to suggest they improve performance? Ideally, we should even be able to understand why they work based on our understanding of the transformer architecture.

By analogy, in searching we know from decades of practice and research that a strategy of

a. Breaking a search intent into key concepts

b. Combining synonyms of each concept with an OR operator

c. Chaining them all together with an AND operator

is likely to increase recall, particularly if further supplemented with citation searching.
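The three steps above are mechanical enough to sketch in a few lines of Python. This is only an illustration: the function name `build_boolean_query` and the example concepts are made up here, and real databases differ in their exact operator and phrase syntax.

```python
def build_boolean_query(concepts):
    """Join synonyms with OR inside each concept, then join concepts with AND."""
    groups = []
    for synonyms in concepts:
        # Quote multi-word terms so they are treated as phrases.
        terms = [f'"{term}"' if " " in term else term for term in synonyms]
        groups.append("(" + " OR ".join(terms) + ")")
    return " AND ".join(groups)


# Step a: the search intent broken into key concepts (hypothetical example).
# Step b: each inner list holds the synonyms for one concept.
concepts = [
    ["teenagers", "adolescents", "young people"],
    ["social media", "Instagram"],
    ["anxiety", "depression"],
]

# Step c: chain the concept groups together.
query = build_boolean_query(concepts)
print(query)
```

The question in this post is whether prompt engineering has anything as well understood as this recipe.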

Do we have something similar for prompt engineering?

From what I can tell, exploiting in-context learning seems to be one commonly used technique.

The basic technique - In-context learning

However,

GPT-3 showed that when the models got big enough, one could improve

results for some tasks by typing examples in the prompt in plain text

and it would "learn" and do better, compared to not giving examples.

This is known as in-context learning.

As shown in the example above, for a translation task you can type in one example in the prompt (one-shot) or several examples (few-shot). Of course, one could give no examples, and this would be known as zero-shot prompting.
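To make the zero-, one- and few-shot distinction concrete, here is a minimal sketch that assembles such prompts as plain text. The helper name `build_translation_prompt`, the "English:/French:" layout and the example pairs are assumptions chosen for illustration, not a prescribed format. The resulting string is simply what you would type into the chat box or send to a model.

```python
def build_translation_prompt(examples, query):
    """Return a plain-text prompt containing zero or more worked examples."""
    lines = ["Translate English to French."]
    for english, french in examples:
        # Each worked example is just more text in the prompt.
        lines.append(f"English: {english}")
        lines.append(f"French: {french}")
    # The final, unanswered item is what the model is asked to complete.
    lines.append(f"English: {query}")
    lines.append("French:")
    return "\n".join(lines)


examples = [
    ("sea otter", "loutre de mer"),
    ("peppermint", "menthe poivrée"),
    ("good morning", "bonjour"),
]

zero_shot = build_translation_prompt([], "cheese")            # no examples
one_shot = build_translation_prompt(examples[:1], "cheese")   # one example
few_shot = build_translation_prompt(examples, "cheese")       # several examples

print(few_shot)
```

Nothing about the model changes between the three variants; only the text of the prompt does.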

This is what makes prompt engineering possible: since you just type in prompts, there is no machine learning training at all! Of course, unlike fine-tuning, which changes the weights of the model and so alters it permanently, examples given in a prompt are only temporarily "learnt", and the change will not persist once the session ends.

Best way to give examples in prompts

Besides the observation that few-shot learning works, are there any best practices in the way we craft the prompts when giving examples?

keep

the selection of examples diverse, relevant to the test sample and in

random order to avoid majority label bias and recency bias.

That is to say, if you are doing a sentiment analysis task on comments with few-shot prompting, you might want not just to give examples that cover all three categories ("Positive", "Negative", "Neutral") but also to have the number of examples in each category reflect the expected distribution. E.g. if you expect more "Positive" comments, the examples in your few-shot prompt should reflect that.

The examples should also be given in random order, according to another paper.
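As a rough sketch of that advice, the snippet below picks few-shot examples so that the label counts follow an expected distribution and then shuffles them. Everything here is hypothetical: the function name `pick_examples`, the comment pool, and the 50/25/25 split are invented for illustration, and this is one possible reading of the advice rather than a procedure taken from the papers.

```python
import random
from collections import Counter


def pick_examples(pool, distribution, k, seed=0):
    """Pick about k labelled examples matching `distribution`, in random order."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    chosen = []
    for label, share in distribution.items():
        # Keep at least one example per label; rounding means the total
        # may differ slightly from k for other distributions.
        n = max(1, round(k * share))
        candidates = [ex for ex in pool if ex[1] == label]
        chosen.extend(rng.sample(candidates, min(n, len(candidates))))
    rng.shuffle(chosen)  # avoid grouping all examples of one label together
    return chosen


# Hypothetical pool of (comment, label) pairs.
pool = [
    ("Loved the new reading room!", "Positive"),
    ("Staff were very helpful.", "Positive"),
    ("Great opening hours.", "Positive"),
    ("The wifi keeps dropping.", "Negative"),
    ("Too noisy on level 2.", "Negative"),
    ("I returned a book today.", "Neutral"),
    ("The library is on Main Street.", "Neutral"),
]
expected = {"Positive": 0.5, "Negative": 0.25, "Neutral": 0.25}

chosen = pick_examples(pool, expected, k=4)
label_counts = Counter(label for _, label in chosen)
print(label_counts)
```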

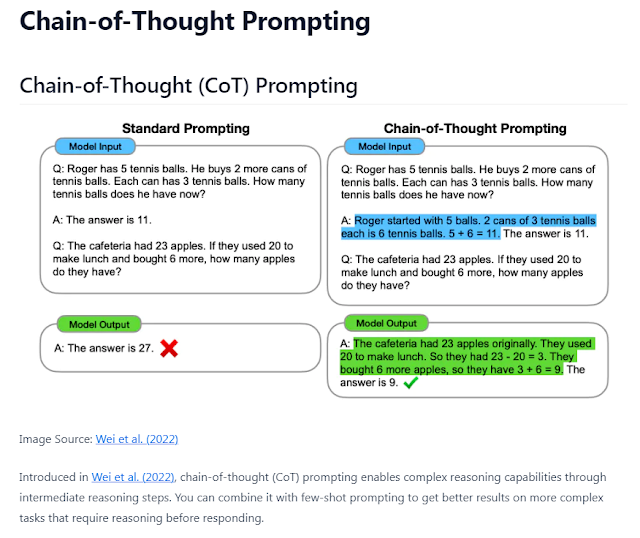

More advanced prompt technique - Chain of thought Prompting

Another famous prompt engineering technique most people would have heard of is Chain-of-Thought Prompting.

The chain of thought prompt is based on the observation that if you provide examples of how to reason in the few-shot prompts, the language model does better at many reasoning tasks than if you give the answers directly in the examples.

I call these tips observations, but they are in fact results from papers (typically preprints on arXiv).

The reasoning for why this works is roughly that if you guide the LLM to reason its way to an answer, it might do better than if it tried to get to the answer immediately. I've seen it explained as "you need to give the LLMs more tokens to reason".

This makes more sense if you understand that the popular GPT-type language models are auto-regressive, decoder-only models: they generate tokens one by one and, unlike a human, cannot "go backwards" once they have generated a token. The prompt "Let's think step by step" is meant to "encourage" the model to solve the problem in small steps rather than try to jump to the answer in one step.

This is also why changing the order by prompting the model to answer the question and then give reasons why is unlikely to help.

For example, the following prompt is bad, or at least will not get you the advantage of Chain of Thought:

You are a financial expert with stock recommendation experience. Answer “YES” if good news, “NO” if bad news, or “UNKNOWN” if uncertain in the first line. Then elaborate with one short and concise sentence on the next line. Is this headline good or bad for the stock price of [company name] in the [term] term?
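By contrast, a few-shot chain of thought prompt puts the reasoning before the answer in each worked example, so the model generates its reasoning tokens first. The sketch below builds both variants as plain text so they can be compared. The helper names, the "Q:/A:" layout and the arithmetic questions are invented for illustration and are not taken from any of the papers.

```python
def format_example(question, reasoning, answer, show_reasoning):
    """Format one worked example, with or without the reasoning steps."""
    if show_reasoning:
        # Reasoning comes first, so the answer is produced after it.
        return f"Q: {question}\nA: {reasoning} The answer is {answer}."
    return f"Q: {question}\nA: The answer is {answer}."


def build_cot_prompt(examples, question, show_reasoning=True):
    """Assemble worked examples followed by the new, unanswered question."""
    parts = [format_example(q, r, a, show_reasoning) for q, r, a in examples]
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


# Hypothetical worked example: (question, reasoning, answer).
examples = [(
    "A library has 3 shelves with 12 books each and lends out 5 books. "
    "How many books remain?",
    "3 shelves of 12 books is 3 * 12 = 36 books. After lending 5, 36 - 5 = 31.",
    "31",
)]
question = (
    "A reading room has 4 tables with 6 seats each, and 7 seats are taken. "
    "How many seats are free?"
)

cot_prompt = build_cot_prompt(examples, question)
direct_prompt = build_cot_prompt(examples, question, show_reasoning=False)
print(cot_prompt)
```

The only difference between `cot_prompt` and `direct_prompt` is whether the example shows its working, which is exactly the variable the chain of thought observation is about.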

Skeptical of the science of prompts?

While it is good that these prompt guides are mostly quoting advice from research papers, as an academic librarian you are of course aware that any individual finding from a paper, even a peer-reviewed one, should be treated with caution (never mind that the ones being quoted in this field are often just preprints).

While I have no doubt the general practice of COT and few-shot learning works (there is far too much follow-up and reproduced research to think otherwise), many of the specific techniques (e.g. the way you specify examples) might be on shaky ground, given that they tend to have much less support for their findings.

Take, for example, the advice that you should do role prompting, e.g. "You are a brilliant Lawyer". I have even seen people try "You have an IQ of 1000" or other similar prompts. Do they really lead to better results? In some sense, such prompts do give the model more context, but it is hard to believe telling it to "Act like a super genius" will really make it super brilliant. :)

To make things worse, while we can understand why things like "Chain of Thought" and its variants work, a lot of the advice quoted from papers rests on findings that, as far as I can tell, are purely empirical, and we do not understand why they work.

For example, as far as I can tell, the tips for Example Selection and Example Ordering are just empirical results; knowledge of how LLMs work leaves you none the wiser.

This is owing to the black box nature of Transformers which are neural nets.

Do the findings even apply for the latest models?

Another problem with such prompt engineering citations is that the techniques are often tested on models older than the state of the art, because of a time lag. For example, some papers cited use ChatGPT (GPT-3.5) or even GPT-3 models rather than GPT-4, which is the latest at the time of writing of this post.

As such, are we sure the findings generalize across LLMs as they improve?

For sure, a lot of the motivation for inventing these special prompt techniques was to solve hard problems that earlier models like GPT-3 or ChatGPT (GPT-3.5) could not solve without prompt engineering. Many of these problems can be solved by GPT-4 without special prompts. It might also be that some of the suggested prompt engineering techniques even hurt GPT-4.

For example, there are some suggestions that role prompting no longer works for newer models.

We also assume everyone is using OpenAI's GPT family, both for researching prompt engineering and for everyday use, so that there is a greater likelihood the findings are stable even for newer versions. In fact, people might be using open-source LLaMA models or even "retrieval-augmented language models" like Bing Chat or ChatGPT with the browsing plugin.

Given how most of the advice you see is based on research assuming you are using ChatGPT without search, this can be problematic if one shifts to using, say, Bing Chat or ChatGPT with the browsing plugin. Take the simplest question: do COT prompts help with Bing Chat? I am not sure there is research on this.

A rebuttal to this is that evidence synthesis guides like the Cochrane Handbook for Systematic Reviews of Interventions cite even older evidence, and search engines have also changed a lot compared to, say, 10 years ago. (Again, think of how some modern search engines are often no longer optimized for strict Boolean, or even use semantic search and do not ignore stop words.)

One can rebut that rebuttal and say that even if search engines change, they are a mature class of product and unlikely to change as much as language models, which are a new technology.

In conclusion, I don't think we have enough evidence at this point to say how much prompt engineering helps, though I think most people would say that in many situations it can help get better quality answers. The fact that neural-net-based transformers lack explainability makes it even harder to be sure.

If there

is a "science of prompting", it is very new and developing, though it is

still possible to teach prompt engineering if we ensure we send out the

right message and not overhype prompt engineering.

How hard is it for librarians to teach prompt engineering?

Understanding the limitations of the current technology (Transformer-based, decoder-only language models) will help with prompt engineering. I already gave an example earlier of how understanding the autoregressive nature of GPT models helps you understand why Chain of Thought prompting works.

But how deep does this knowledge need to go to do prompt engineering effectively? If it is too deep, librarians without the necessary background would struggle.

Do you need to learn the ins and outs of the transformer architecture, master concepts and modules like Masked Multi-Head Attention and positional encoding, or even understand the actual code?

Or do you just need a somewhat abstract understanding of embeddings, the idea of language models, and how GPT models are pretrained through self-supervised learning on large amounts of text with the next-token prediction task?

Or perhaps, you don't even need to know all that.

Most prompt engineering guides and courses try to give the learner a very high-level view of how LLMs work. Compared to what I have been studying on LLMs, the level of understanding they try to impart is quite simple, and I wager most librarians who are willing to study this should be capable of reaching the necessary level of expertise.

Why don't you need very deep knowledge of LLMs to do prompt engineering? Part of it is the same reason you can teach effective Boolean searching without really needing to know the details of how search engines work. How many librarians teaching searching really understand how Elasticsearch, inverted indexes etc. work? Many would not even remember how TF-IDF (or the more common BM25) works, even if they were exposed to it once in a library school class years ago. I certainly didn't.

It also helps that LLMs are very much black boxes.

While in some cases a general understanding of how decoder-only Transformer-based models work helps you understand why some techniques work (see above on the general reasoning for COT prompting), for other techniques and advice the reason, if there is one, looks opaque to me.

For example, as far as I can tell, the tips for Example Selection and Example Ordering are just empirical results; knowledge of how LLMs work leaves you none the wiser.

Advanced prompt engineering techniques need basic coding

That said, when you look at prompt engineering techniques, they split into two types. The first type is what I have already mentioned: simple prompts you can manually key into the prompt box and use.

However, more advanced prompting techniques require you to issue many prompts, which is often unrealistic to do by hand. For example, Self-Consistency prompting requires you to prompt the system multiple times with the same prompt and take the majority answer.
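The majority-vote idea is easy to sketch in code. In the snippet below, `ask_model` is a hypothetical stand-in for whatever function sends a prompt to a language model and returns its final answer. Here it is replaced by a scripted fake so the example runs without any API access. In real use the model would need to be sampled with some randomness (a non-zero temperature), otherwise every call would return the same answer.

```python
from collections import Counter


def self_consistency(ask_model, prompt, n_samples=5):
    """Ask the same prompt n_samples times and return the most common answer."""
    answers = [ask_model(prompt) for _ in range(n_samples)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes


def make_scripted_model(canned_answers):
    """Hypothetical stand-in for a real model call: replays canned answers."""
    remaining = iter(canned_answers)
    return lambda prompt: next(remaining)


fake_model = make_scripted_model(["31", "41", "31", "31", "29"])
answer, votes = self_consistency(fake_model, "How many books remain?", n_samples=5)
print(answer, votes)
```

Doing this by hand would mean pasting the same prompt five times and tallying the answers yourself, which is why this family of techniques is usually scripted.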

Other, even more advanced techniques like ReAct (reason, act) are even more complicated, requiring you to type in multiple prompts and to fetch answers from external systems, which is too unwieldy to do manually.

https://learnprompting.org/docs/advanced_applications/react

Realistically, these types of techniques involve the use of OpenAI's APIs in scripts and/or the use of LLM frameworks like LangChain to automate and chain together multiple prompts and tools/plugins.

They are mostly quite easy to do if you have a beginner-level understanding of coding (often all you need is to run a Jupyter Notebook), but not all librarians are capable of that.
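To give a feel for what such a script looks like, here is a heavily simplified sketch of a ReAct-style loop: the model's text is scanned for an action, the named tool is run, and the result is fed back as an observation until a final answer appears. All names here (`react_loop`, `lookup`, the "Action: tool[input]" format, the scripted fake model) are assumptions made for illustration. This is not the API of LangChain or OpenAI, and real implementations handle prompting, parsing and errors with far more care.

```python
import re


def lookup(term):
    """Hypothetical tool: a stand-in for a search engine or catalogue API."""
    facts = {
        "Cochrane Handbook": "A guide to conducting systematic reviews of interventions.",
    }
    return facts.get(term, "No result found.")


TOOLS = {"lookup": lookup}


def react_loop(ask_model, question, max_steps=5):
    """Alternate model output (thought/action) with tool observations."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = ask_model(transcript)  # the model sees everything so far
        transcript += step + "\n"
        final = re.search(r"Final Answer: (.*)", step)
        if final:
            return final.group(1).strip(), transcript
        action = re.search(r"Action: (\w+)\[(.*?)\]", step)
        if action:
            tool, arg = action.groups()
            result = TOOLS[tool](arg) if tool in TOOLS else f"Unknown tool: {tool}"
            transcript += f"Observation: {result}\n"
    return None, transcript  # gave up after max_steps


def make_scripted_model(steps):
    """Hypothetical stand-in for a real model call: replays canned steps."""
    remaining = iter(steps)
    return lambda transcript: next(remaining)


model = make_scripted_model([
    "Thought: I should look this up.\nAction: lookup[Cochrane Handbook]",
    "Thought: The observation answers the question.\n"
    "Final Answer: It is a guide to conducting systematic reviews of interventions.",
])
answer, transcript = react_loop(model, "What is the Cochrane Handbook?")
print(transcript)
```

Even in this toy form, the loop involves several round trips between the model and an external tool, which shows why it is impractical to do by typing into a chat window.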

How much is there to teach? Will users benefit?

Assuming we keep to standard prompt engineering with prompts that are meant to be manually typed into the web interface, how much content is there really to teach? Is prompt engineering so intuitive and obvious that there is no point teaching it?

My opinion is that one could cover the

main things on prompt engineering fairly quickly say in a one-shot

session. Could users learn prompt engineering themselves from reading

webpages? Of course, they could! But librarians teach Boolean operators

which could also be self-taught easily, so this isn't a reason not to

teach.

I also think there might be an argument that we should teach prompt engineering precisely because it is so different from normal search.

While trying out formal prompt engineering, I found my

instincts warring with myself when I was typing out the long prompts

based on various elements that prompt engineering claims will give

better results.

Why is that? I suspect it is because it is ingrained in me, when writing search queries, to remove stop words and focus on the key concepts, so it feels odd to type out such long prompts. If it feels as unnatural to most users, who are also used to search engines, as it does to me, this again points to the need for librarians to educate.

Lastly, while there is currently hype about how prompt engineering could land you a six-figure job, Ethan Mollick disagrees: he thinks there is no magic prompt, and that prompt engineering is going to be a temporary phase that becomes less important as the technology improves.

He even quotes OpenAI staff.

I agree, and one way to think about it is this: will knowledge of prompt engineering be more like knowing how to use email or Office, which everyone knows (but with different degrees of skill), or will it be something like Python coding, which most people do not know at all?

I

think it's the former and there's some utility for librarians to learn

and teach prompt engineering in short workshops in the short term.

Conclusion

My current tentative conclusion is that there might indeed be something there in prompt engineering for librarians.

This

is particularly so since many institutions are turning to librarians

anyway with the idea that we are capable of guiding users here.

The main issue with librarians claiming expertise here is that this is a very new area, and I am personally not comfortable claiming I am an expert in prompt engineering and my users should listen to me. I believe that if librarians are to do this, we should ensure users are aware this is as new to us as it is to them, and that we are learning together with them.

I

also worry we will be asked to show prompt engineering in domains we

are not familiar with and hence be unable to easily evaluate the quality

of output.
